To many people, the self-help movement—with its positive self-talk, daily feel-good affirmations, and emphasis on vague concepts like “gratitude” and “acceptance”—seems like cheesy psychobabble. Take, for instance, Al Franken’s fictional early-1990s SNL character Stuart Smalley: a perennially cheerful, cardigan-clad “member of several 12-step groups but not a licensed therapist,” whose annoyingly positive attitude mocked the idea that personal suffering could be overcome with absurdly simple affirmative self-talk.

Stuart Smalley was clearly a caricature of the 12-step movement (in fact, many of his “catchphrases” came directly from 12-step principles), but there’s little doubt that the strategies he espoused have worked for many patients in their efforts to overcome alcoholism, drug addiction, and other types of mental illness.

Twenty years later, we now realize Stuart may have been onto something.

A review by Kristin Layous and her colleagues, published in this month’s Journal of Alternative and Complementary Medicine, shows evidence that daily affirmations and other “positive activity interventions” (PAIs) may have a place in the treatment of depression. They summarize recent studies examining such interventions, including two randomized controlled studies in patients with mild clinical depression, which show that PAIs do, in fact, have a significant (and rapid) effect on reducing depressive symptoms.

What exactly is a PAI? The authors offer some examples: “writing letters of gratitude, counting one’s blessings, practicing optimism, performing acts of kindness, meditation on positive feelings toward others, and using one’s signature strengths.” They argue that when a depressed person engages in any of these activities, he or she not only overcomes depressed feelings (if only transiently) but can also use this to “move past the point of simply ‘not feeling depressed’ to the point of flourishing.”

Layous and her colleagues even summarize results of clinical trials of self-administered PAIs. They report that PAIs had effect sizes of 0.31 for depressive symptoms in a community sample, and 0.24 and 0.23 in two studies specifically with depressed patients. By comparison, psychotherapy has an average effect size of approximately 0.32, and psychotropic medications (although there is some controversy) have roughly the same effect.

[BTW, an “effect size” is a standardized measure of the magnitude of an observed effect. An effect size of 0.00 means the intervention has no impact at all; an effect size of 1.00 means the intervention causes an average change (measured across the whole group) equivalent to one standard deviation of the baseline measurement in that group. An effect size of 0.5 means the average change is half the standard deviation, and so forth. In general, an effect size of 0.10 is considered to be “small,” 0.30 is “medium,” and 0.50 is a “large” effect. (Strictly speaking, those benchmarks are Cohen’s conventions for effect sizes expressed as a correlation, r; for effect sizes expressed in standard-deviation units, as described here, the usual benchmarks are 0.20, 0.50, and 0.80.) For more information, see this excellent summary.]
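For the numerically inclined, here is a minimal sketch of that definition in Python: the average change in a group divided by the standard deviation of the group's baseline scores. The function name `effect_size` and the scores are hypothetical, made up purely for illustration; they do not come from the Layous review, and the studies it summarizes may well compute their effect sizes differently (for example, as correlations).

```python
from statistics import mean, stdev


def effect_size(baseline, followup):
    """Average improvement divided by the baseline standard deviation.

    Assumes lower scores mean fewer depressive symptoms, so a positive
    result indicates improvement. Hypothetical helper for illustration.
    """
    if len(baseline) != len(followup):
        raise ValueError("baseline and followup must have the same length")
    # Per-person change: how many points each score dropped.
    changes = [b - f for b, f in zip(baseline, followup)]
    # Standardize the average change by the spread of the baseline scores.
    return mean(changes) / stdev(baseline)


# Invented depression-scale scores for five people (NOT real study data).
baseline = [10, 14, 18, 22, 26]
followup = [8, 12, 16, 20, 24]

print(round(effect_size(baseline, followup), 2))  # prints 0.32
```

In this invented example everyone improves by 2 points, and the baseline scores have a standard deviation of about 6.3, so the average change is roughly a third of a standard deviation.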

So if PAIs work about as well as medications or psychotherapy, then why don’t we use them more often in our depressed patients? Well, there are a number of reasons. First of all, until recently, no one has taken such an approach very seriously. Despite its enormous common-sense appeal, “positive psychology” has only been a field of legitimate scientific study for the last ten years or so (one of its major proponents, Sonja Lyubomirsky, is a co-author on this review) and therefore has not received the sort of scientific scrutiny demanded by “evidence-based” medicine.

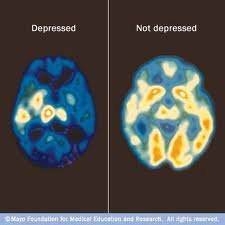

A related explanation may be that people just don’t think that “positive thinking” can cure what they feel must be a disease. As Albert Einstein once said, “You cannot solve a problem from the same consciousness that created it.” The implication is that one must seek outside help (a drug, a therapist, some expert) to treat one’s illness. But the reality is that for most cases of depression, “positive thinking” is outside help. It’s something that, almost by definition, depressed people don’t do. If they were to try it, they might reap great benefits, while simultaneously changing the neural pathways responsible for the depression in the first place.

Which brings me to the final two reasons why “positive thinking” isn’t part of our treatment repertoire. For one thing, there’s little financial incentive (to people like me) to do it. If my patients can overcome their depression by “counting their blessings” for 30 minutes each day, or acting kindly towards strangers ten times a week, then they’ll be less likely to pay me for psychotherapy or for a refill of their antidepressant prescription. Thus, psychiatrists and psychologists have a vested interest in patients believing that their expert skills and knowledge (of esoteric neural pathways) are vital for a full recovery, when, in fact, they may not be.

Finally, the “positive thinking” concept may itself become too “medicalized,” which may ruin an otherwise very good idea. The Layous article, for example, tries to give a neuroanatomical explanation for why PAIs are effective. The authors write that PAIs “might be linked to downregulation of the hyperactivated amygdala response” or might cause “activation in the left frontal region” and lower activity in the right frontal region. Okay, these explanations might be true, but the real question is: does it matter? Is it necessary to identify a mechanism for everything, even interventions that are (a) non-invasive, (b) cheap, (c) easy, (d) safe, and (e) effective? In our great desire to identify neural mechanisms or “pathways” of PAIs, we might end up finding nothing; it would be a shame if this result (or, more accurately, the lack thereof) led us to the conclusion that it’s all “pseudoscience,” hocus-pocus, psychobabble stuff, and not worthy of our time or resources.

At any rate, it’s great to see that alternative methods of treating depression are receiving some attention. I just hope that their “alternative-ness” doesn’t earn immediate rejection by the medical community. On the contrary, we need to identify those for whom such approaches are beneficial; engaging in “positive activities” to treat depression is an obvious idea whose time has come.

Posted by stevebMD
