Do antipsychotics treat PTSD? It depends. That seems to be the best response I can give, based on the results of two recent studies on this complex disorder. A better question, though, might be: what do antipsychotics treat in PTSD?

One of these reports, a controlled, double-blind study of the atypical antipsychotic risperidone (Risperdal) for the treatment of “military service-related PTSD,” was featured in a New York Times article earlier this month. The NYT headline proclaimed, somewhat unceremoniously: “Antipsychotic Use is Questioned for Combat Stress.” And indeed, the actual study, published in the Journal of the American Medical Association (JAMA), demonstrated that a six-month trial of risperidone did not improve patients’ scores on a scale of PTSD symptoms, when compared to placebo.

But almost simultaneously, another paper was published in the online journal BMC Psychiatry, stating that Abilify—a different atypical antipsychotic—actually did help patients with “military-related PTSD with major depression.”

So what are we to conclude? Even though there are some key differences between the studies (which I’ll mention below), a brief survey of the headlines might leave the impression that the two reports “cancel each other out.” In reality, I think it’s safe to say that neither study contributes very much to our treatment of PTSD. But it’s not because of the equivocal results. Instead, it’s a consequence of the premises upon which the two studies were based.

PTSD, or post-traumatic stress disorder, is an incredibly complicated condition. The diagnosis was first given to Vietnam veterans who, for years after their service, experienced symptoms of increased physiological arousal, avoidance of stimuli associated with their wartime experience, and continual re-experiencing (in the form of nightmares or flashbacks) of the trauma they had endured or witnessed. It’s essentially a re-formulation of conditions that were, in earlier years, labeled “shell shock” or “combat fatigue.”

Since the introduction of this disorder in 1980 (in DSM-III), the diagnostic umbrella of PTSD has grown to include victims of sexual and physical abuse, traumatic accidents, natural disasters, terrorist attacks (like the September 11 massacre), and other criminal acts. Some have even argued that poverty or unfortunate psychosocial circumstances may also qualify as the “traumatic” event.

Not only are the types of stressors that cause PTSD widely variable, but so are the symptoms that ultimately develop. Some patients complain of minor but persistent symptoms, while others experience infrequent but intense exacerbations. Similarly, the neurobiology of PTSD is still poorly understood, and may vary from person to person. And we’ve only just begun to understand protective factors for PTSD, such as the concept of “resilience.”

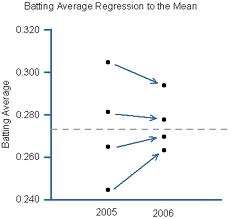

Does it even make sense to say that one drug can (or cannot) treat such a complex disorder? Take, for instance, the scale used in the JAMA article to measure patients’ PTSD symptoms. The PTSD score they used as the outcome measure was the Clinician-Administered PTSD Scale, or CAPS, considered the “gold standard” for PTSD diagnosis. But the CAPS includes 30 items, ranging from sleep disturbances to concentration difficulties to “survivor guilt”:

It doesn’t take a cognitive psychologist or neuroscientist to recognize that these 30 domains (all features of what we consider “clinical” PTSD) could be explained by just as many, if not more, neural pathways, and may be experienced in entirely different ways, depending on one’s psychological makeup and the nature of one’s past trauma.

In other words, saying that Risperdal is “not effective” for PTSD is like saying that acupuncture is not effective for chronic pain, or that a low-carb diet is not an effective way to lose weight. Statistically speaking, these interventions might not help most patients, but in some, they may indeed play a crucial role. We just don’t understand the disorders well enough.

[By the way, what about the other study, which reported that Abilify was helpful? Well, this study was a retrospective review of patients who were prescribed Abilify, not a randomized, placebo-controlled trial. And it did not use the CAPS, but the PCL-M, a shorter survey of PTSD symptoms. Moreover, it only included 27 of the 123 veterans who agreed to take Abilify, and I cannot, for the life of me, figure out why the other 96 were excluded from their analysis.]

Anyway, the bottom line is this: PTSD is a complicated, multifaceted disorder, probably a combination of disorders, similar to much of what we see in psychiatry. To say that one medication “works” or another “doesn’t work” oversimplifies the condition almost to the point of absurdity. And when the New York Times publicizes such a finding, it only gives more credence to the misconception that a prescription medication is (or has the potential to be) the treatment of choice for all patients with a given diagnosis.

What we need is not another drug trial for PTSD, but rather a better understanding of the psychological and neurobiological underpinnings of the disease, a comprehensive analysis of which symptoms respond to which drug, which aspects of the disorder are not amenable to medication management, and how individuals differ in their experience of the disorder and in the tools (pharmacological and otherwise) they can use to overcome their despair. Anything else is a failure to recognize the human aspects of the disease, and an issuance of false hope to those who suffer.

Posted by stevebMD